In a recent project, we had to use “Protocol Buffers”. It is a language-neutral, platform-neutral, extensible mechanism for serializing structured data.

With Protocol Buffers, data is sent from one end to the other not in the usual JSON format, but as binary. The received data is then converted to native objects in the language of your choice by deserializers generated from a single .proto file.

Because the data is serialized to binary, the amount of traffic can be significantly reduced (which we will demonstrate below).

Let’s see it in action with some examples!

First things first: Protocol Buffers promises to reduce traffic and improve performance. Is this for real?

In our first test we took some sample JSON data:

{

"humanList":[

{

"name": "Mario",

"age": "29"

},

{

"name": "Tony",

"age": "33"

}

]

}

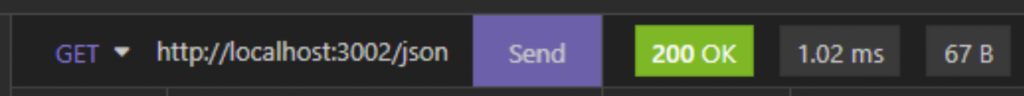

API response as JSON:

API with the same data serialized with proto buffer:

As you can see, the response is already more than 60% smaller!

To show a more extensive example, we created a public repo with a React front-end app and a Node.js backend server. The front-end app retrieves some data from the backend using Protocol Buffers and displays it on a web page.

But first, we are going to introduce you to a simple proto-model.

syntax = "proto3";

message Human {

string name = 1;

int32 age = 2;

}

Here we created a message called Human and added two fields (name and age). We also specified the type of each field and assigned each one a unique field number, which identifies the field inside the serialized binary data. Once deserialized, a message is simply an object in your programming language.
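To make the role of the field numbers more concrete, here is a minimal sketch of how a serialized Human could be read back by hand. It is not the code that protoc generates: `decodeVarint` and `decodeHuman` are hypothetical helpers, and the sketch assumes small non-negative numbers and only the two wire types this message needs (varint and length-delimited). The nine input bytes are what the wire format prescribes for name "Mario" and age 29.

```javascript
// Hand-rolled reader for the Human message (illustration only).
// decodeVarint and decodeHuman are hypothetical helper names.

// Read a base-128 varint starting at `pos`; return the value and the next position.
function decodeVarint(bytes, pos) {
  let value = 0
  let factor = 1
  while (true) {
    const byte = bytes[pos++]
    value += (byte & 0x7f) * factor
    if ((byte & 0x80) === 0) return { value, pos }
    factor *= 128
  }
}

function decodeHuman(bytes) {
  const human = { name: '', age: 0 } // proto3 default values
  let pos = 0
  while (pos < bytes.length) {
    // Every field starts with a tag: (fieldNumber << 3) | wireType
    const tag = decodeVarint(bytes, pos)
    pos = tag.pos
    const fieldNumber = tag.value >>> 3
    const wireType = tag.value & 0x7

    if (wireType === 0) {
      // Varint payload (used by int32)
      const field = decodeVarint(bytes, pos)
      pos = field.pos
      if (fieldNumber === 2) human.age = field.value
    } else if (wireType === 2) {
      // Length-delimited payload (used by string)
      const len = decodeVarint(bytes, pos)
      const end = len.pos + len.value
      if (fieldNumber === 1) {
        human.name = Buffer.from(bytes.subarray(len.pos, end)).toString('utf8')
      }
      pos = end
    } else {
      throw new Error(`wire type ${wireType} is not handled in this sketch`)
    }
  }
  return human
}

const mario = decodeHuman(Buffer.from('0a054d6172696f101d', 'hex'))
console.log(mario) // { name: 'Mario', age: 29 }
```

Note that the field names never appear in the bytes. Only the numbers 1 and 2 do, which is why they must stay unique and stable.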

What about an array of messages? Here we go:

message Humans {

repeated Human humans = 1;

}

It is very self-explanatory: “Message Humans will have a field called humans and will contain repeated Human messages” (in other words, an array of Human messages). Easy-peasy. We are going to use this proto file, saved as humans.proto, for the whole tutorial.
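With both messages defined, the size claim from the beginning of the article can be checked by hand. The sketch below is an illustration under stated assumptions, not the generated serializer: `encodeVarint`, `lengthDelimited`, `encodeHuman`, and `encodeHumans` are hypothetical helpers that follow the Protocol Buffers wire format for these two messages only. They assume non-negative integers and do not skip default values the way a real proto3 serializer does.

```javascript
// Hand-rolled writer for Human and Humans (illustration only, hypothetical helpers).

// Encode a non-negative integer as a base-128 varint.
function encodeVarint(value) {
  const out = []
  while (value > 0x7f) {
    out.push((value % 128) | 0x80)
    value = Math.floor(value / 128)
  }
  out.push(value)
  return out
}

// Tag + length + payload for a length-delimited field (strings, nested messages).
function lengthDelimited(fieldNumber, payload) {
  return [
    ...encodeVarint(fieldNumber * 8 + 2), // (fieldNumber << 3) | wire type 2
    ...encodeVarint(payload.length),
    ...payload,
  ]
}

// message Human { string name = 1; int32 age = 2; }
function encodeHuman({ name, age }) {
  return [
    ...lengthDelimited(1, Buffer.from(name, 'utf8')),
    ...encodeVarint(2 * 8 + 0), // tag for field 2, wire type 0 (varint)
    ...encodeVarint(age),
  ]
}

// message Humans { repeated Human humans = 1; }
// A repeated message field is written once per element, each time with the same tag.
function encodeHumans(humans) {
  return Buffer.from(humans.flatMap((h) => lengthDelimited(1, encodeHuman(h))))
}

// The sample JSON from the start of the article (ages are strings there).
const jsonPayload = {
  humanList: [
    { name: 'Mario', age: '29' },
    { name: 'Tony', age: '33' },
  ],
}

const jsonSize = Buffer.byteLength(JSON.stringify(jsonPayload))
const protoBytes = encodeHumans(
  jsonPayload.humanList.map((h) => ({ name: h.name, age: Number(h.age) }))
)

console.log(jsonSize, protoBytes.length) // 70 21
```

For this tiny payload the binary body is 21 bytes against 70 bytes of minified JSON. A real HTTP response also carries headers, so measured numbers need not match these exactly.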

Now let’s get serious and start using the tools for compiling proto files. Our first step is to download the protoc tool from https://github.com/protocolbuffers/protobuf/releases

Choose the package according to your OS. In my case it is Windows, so I download the zip, extract the files to a folder somewhere on the system, and add the location of the bin folder to the PATH environment variable so that the protoc command is accessible from the command-line shell.

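Now you can generate the JavaScript serializer from the proto file defined earlier. Note that recent protoc releases no longer bundle the JavaScript generator, so you may also need the separate protoc-gen-js plugin from the protobuf-javascript project: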

protoc --js_out=import_style=commonjs,binary:. humans.proto

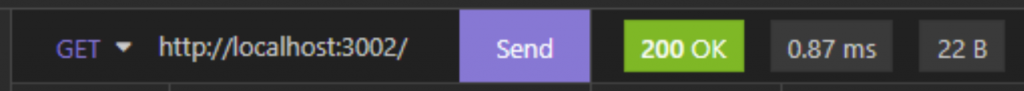

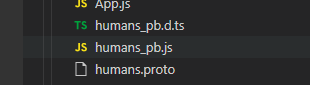

If everything is configured correctly, you will see the generated JavaScript file (humans_pb.js) in the folder:

If you also want TypeScript declarations that match the JavaScript output, consider installing another package using npm:

npm install -g ts-protoc-gen

To generate the TypeScript typings alongside the JavaScript output, modify the command as follows:

protoc --js_out=import_style=commonjs,binary:. --ts_out="." humans.proto

We are going to use these generated files in the sample project.

Let’s take a look at the code and see how we can map the JSON to a Protocol Buffers object:

// Generated by protoc from humans.proto

const HumanSchema = require('./humans_pb')

const humanList = {

humanList: [

{ name: 'Mario', age: '29' },

{ name: 'Rossi', age: '30' }

]

}

const data = new HumanSchema.Humans()

for (const item of humanList.humanList) {

const human = new HumanSchema.Human()

human.setName(item.name)

// age is an int32 in the proto file, so convert the JSON string to a number

human.setAge(Number(item.age))

data.addHumans(human)

}
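How the bytes leave the server is not shown in the snippet above. A minimal sketch, assuming a plain Node.js request handler (the sample repository may well do this differently): `sendBinary` is a hypothetical helper, and the `Uint8Array` passed to it stands in for the result of calling `serializeBinary()` on the message. `application/octet-stream` is one common choice of content type, not a requirement.

```javascript
// Hypothetical helper: write protobuf bytes to a Node.js http.ServerResponse.
function sendBinary(res, bytes) {
  res.writeHead(200, {
    // Not JSON: tell the client that it is receiving raw bytes
    'Content-Type': 'application/octet-stream',
    'Content-Length': bytes.length,
  })
  // Wrap the same memory in a Buffer (no copy) so Node sends it unchanged
  res.end(Buffer.from(bytes.buffer, bytes.byteOffset, bytes.byteLength))
}

// Possible usage inside a request handler, with `data` built as in the article:
//   http.createServer((req, res) => sendBinary(res, data.serializeBinary()))
```

Sending the bytes as-is matters here: converting them to a string on the way out would corrupt any byte that is not valid text.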

The data object can then be serialized with data.serializeBinary(), returned as a binary response, and deserialized in the front end as shown below:

import * as Schema from './humans_pb'

fetch("endpoint")

.then(value => value.arrayBuffer())

.then((buffer) => {

const Humans = Schema.Humans

const data = Humans.toObject(false,Humans.deserializeBinary(buffer))

console.log('deserialized Object', data)

setData(data.humansList)

})

Don’t forget to check out and clone this repository for the full working example.

Using Protocol Buffers will not only decrease the amount of data transferred between applications. It also forces you to define the format of the exchanged data early, in a set of .proto files that are shared among projects.

Check here for a more comprehensive list of the pros and cons of using Protocol Buffers.

About the author

From Unity to Python, going through React, TypeScript, and Flutter, and always looking for the next coding dialect: a lifelong learner.